Bhattacharya – HW for Rapidly Solving High-order Optimization Problems

PhD student Tinish Bhattacharya leads development of architecture to compute high-degree polynomial gradients in-memory

From the UCSB The Current article "Innovative Hardware for Rapidly Solving High-order Optimization Problems"

The rise of AI, graphic processing, combinatorial optimization, and other data-intensive applications have resulted in data-processing bottlenecks, as ever greater amounts of data must be shuttled back and forth between the memory and compute elements in a computer. The physical distance is small, but the process can occur billions of times per second, so the energy and time required to move so much data adds up. In response, computer engineers are working to design specialized hardware accelerators having innovative architectures to improve the performance of such applications.

Prior efforts to develop hardware for accelerating solutions to optimization problems have involved Ising machines, a category of hardware solvers that incorporate the Ising model to find the absolute or approximate ground state — i.e., the energy minimum. Until now, hardware architectures for Ising machines could efficiently solve problems with quadratic polynomial objective functions but were not scalable to increasingly relevant higher-order problems (those having a polynomial objective function with order greater than two), which arise in many industrial and scientific scenarios, such as protein folding, electronic-structure prediction, AI-model verification, circuit routing, fault diagnosis, and scheduling.

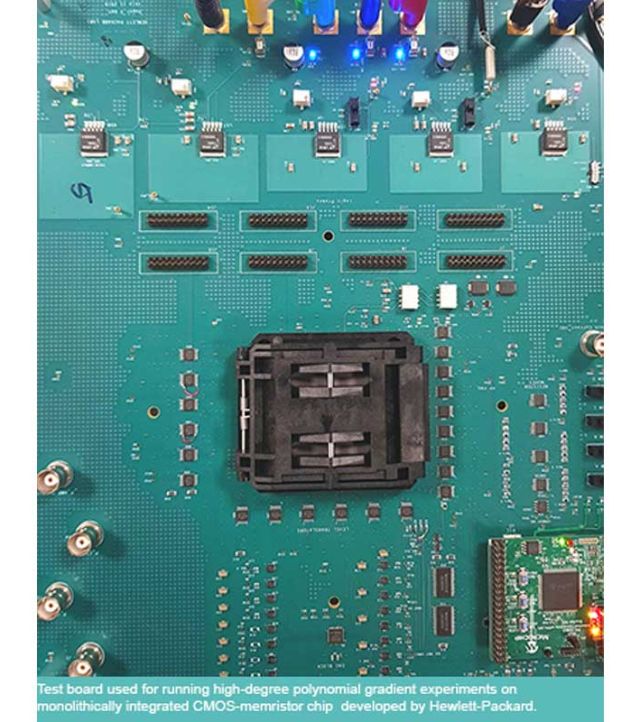

Tinish Bhattacharya, a sixth-year PhD student in the lab of electrical and computer engineering professor Dmitri Strukov, conducts research in this area. He and several industry collaborators, along with academic colleagues in Europe, and industrial collaborator Hewlett Packard Labs, have developed specialized function gradient computing hardware to accelerate the rate at which complex high-order optimization problems can be solved. A paper describing the work, titled “Computing High-degree Polynomial Gradients in Memory,” appeared in the Sept. 18 issue of the journal Nature Communications. In November, the paper was selected by the Nature Communication editors to be featured in the Editor's Highlight pages, which showcase the fifty best recent papers in a given area of research.

The authors write in the paper abstract, “We propose an approach for massively parallel gradient calculations of high-degree polynomials, which is conducive to efficient mixed-signal in-memory computing circuit implementations….”

Bhattacharya uses a visual image to begin unpacking that statement. “You can imagine that the objective function of any optimization problem, such as an AI workload, represents an N-dimensional ‘energy landscape,’ where each combination of variable values represents a unique point in that landscape,” he says, “and the goal is to find the set of variable assignments that corresponds to the lowest (or more generally, as close as possible to the lowest) point in that landscape.”

By way of a parallel, he suggests an actual landscape, “Imagine yourself high in the Sierra Nevada mountains, and your objective is to find the lowest point in a given area, as quickly as possible and with the least possible effort. To achieve that, obviously, you will follow the steepest downward slope. The information about the steepness and the direction in which the steepest slope lies with respect to where you are standing is given by the function’s gradient at that point. You proceed by taking incremental steps and recalculating the gradient after each one to confirm that you’re still on the steepest slope. This example posits a three-dimensional landscape that could be represented by x, y, and z axes, and the gradient calculation is relatively simple. Practical optimization problems, however, may have hundreds of thousands of variables.”

“Other nuances are associated with the search process, for instance depending on where you start, you may arrive at a local low point, but one that is not the lowest point in your search area. There are different ways to approach that — to escape a low point that is not the lowest point — but the key idea is to be able to restart by jumping to a different start point and then performing gradient descent again. Therefore, the gradient calculation operation is performed iteratively, over and over, and we need to be able to do it fast and efficiently.”

The energy minimum can represent different things, depending on the problem. In the Sierra example, it is the lowest point. In the classic traveling-salesman problem, it would be the sequence of stops that requires minimum time to cover a territory. The traveling-salesman problem has a second-order polynomial objective function, such as x1x2 plus x3x4 plus x5x6, where x1x2 is a monomial, and the function is of order two, since it has a maximum of two variables across all monomials. A wide range of industrially relevant combinatorial optimization problems, however, including the Boolean Satisfiability, have higher-order polynomial objective functions (any order greater than two).

In contrast, he adds, much of the currently proposed, state-of-the-art hardware for solving these kinds of problems are limited to second-order problems. “They can solve a traveling salesman problem, but if you give them a Boolean satisfiability problem, they can’t do it in the native high-order function without first reducing it to a second-order one. That not only involves pre-processing time and energy, but also adds many extra variables to the original objective function, increasing exponentially the time to solve such problems.

“The main benefit of our hardware,” Battacharya continues, “is that it can solve problems like Boolean Satisfiability in their native high-order space without having to do any pre-processing, potentially providing exponential speedup over current hardware architectures that are limited to second-order objective functions.”

How They Do It

A key element of the new hardware is its ability to perform in-memory computing, within the memory array itself, mitigating the memory-compute bottleneck that results from moving vast amounts of data back and forth between memory and processor in a classic computer. The researchers accelerate operations by performing matrix vector multiplication, the mathematical operation behind the gradient-computation step, by using crossbar arrays of memristor devices, an important specialized memory device.

To perform vector-matrix multiplication in a general-purpose computer, the processor reads the first element of the vector and the first element of the first column of the matrix, both of which are stored in the memory, and then computes their product and stores it in a different memory. The processor then repeats the process for the second element of the vector and the first column of the matrix. When a column is completed, the processor asks for all of the stored products, adds them up, stores the result, moves to the next column, repeating the process. The time required to solve the problem depends on the size of the matrix. The larger the problem, the larger the matrix, resulting in more time spent shuttling data to and from memory. The great advantage of in-memory computing is that this operation can be done in a time independent of the size of the matrix. It always requires only one step, with no shuttling of data back and forth, dramatically reducing the time to solve.

The hardware consists of crossbar memories — actual raised surfaces lithographed onto the chip — where several word lines (wires) run horizontally and several bit lines run vertically. Placing a memristor at every location where a word line and a bit line intersect, with one terminal of the device connected to the word line and the other to the bit line, forms a memristor crossbar array. The matrix encoding the problem is stored in the states of these memristors. The vector is applied as proportional read pulses on the word lines. The resulting currents, which flow in the bit lines, then depict the result of the vector-matrix multiplication.

The core innovation that enables gradient computation of high-order polynomials in the native (high-order) space is using two such crossbar arrays back to back. Both crossbars store the matrix depicting the high-order polynomial. The first crossbar computes the high-order monomials of the polynomial. The second crossbar uses this result as its input to compute the high-order gradient for all the variables in each of its bit lines.

This “massively parallel” element of the group’s approach is key to their success. “By that, we mean that our hardware can compute the gradients for each of those variables at the same time, rather than sequentially, as a lot of current hardware does. That's the optimization, in one respect, the fact that we have retained that massively parallel property even when going to that high-order space.”

From an algorithmic point of view, the ability to optimize a native high-order function, as opposed to the reduced second-order version, can result in a speed advantage of nearly two orders of magnitude for problems having only 150 variables. That is still an order of magnitude smaller than most practically relevant problems encountered in real-world scenarios, and the speed advantage is expected to increase exponentially with the addition of more variables.

The UCSB Current – "Innovative Hardware for Rapidly Solving High-order Optimization Problems"